TPDNet: Triple phenotype deepen networks for Monocular 3D Object Detection of Melons and Fruits in Fields

The growing global population has increased demand for fruits and vegetables, while high harvesting labor costs (30% to 50% of total costs) severely constrain the industry's development. To reduce these labor costs, automated harvesting currently relies mainly on 2D object detection. However, 2D detection provides only planar information and cannot meet the needs of scenarios that require 3D spatial data. 3D object detection, which includes point cloud-based methods and monocular methods, can address these needs. Point cloud-based methods require expensive equipment, making them unsuitable for low-cost agricultural harvesting, whereas monocular 3D object detection needs only a camera and is easy to deploy. However, there is a lack of monocular 3D object detection datasets and algorithms tailored to natural scenes in agricultural fields, which limits the application and development of this technology in agricultural automation. To address this, we construct a 3D object detection dataset for wax gourds and propose TPDNet, a network that captures the 3D information of fruits and vegetables in fields from a single RGB image. Specifically, because a single RGB image lacks depth information, we construct a depth estimation and enhancement module that introduces depth into the model through auxiliary depth labels and strengthens the depth representation by weighting features along the spatial and channel dimensions. Meanwhile, because depth features and image features are heterogeneous, we design the phenotype aggregation and phenotype intensify modules to capture the correspondence between image and depth features and promote their effective fusion.
The experimental results show that our method significantly outperforms the compared methods, demonstrating its effectiveness.
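
The abstract states only that the depth enhancement step reweights depth features along the channel and spatial dimensions. A common way to do that is CBAM-style attention: a sigmoid weight per channel (from pooled descriptors through a shared MLP), then a sigmoid weight per pixel (from a convolution over channel-pooled maps). The NumPy sketch below assumes that design; it is not TPDNet's implementation, and the function names (`channel_weights`, `spatial_weights`, `enhance_depth_features`), the reduction ratio `r`, and the 7x7 kernel are hypothetical choices for illustration.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def channel_weights(feat: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Return one weight in (0, 1) per channel of a (C, H, W) feature map."""

    def shared_mlp(v: np.ndarray) -> np.ndarray:
        # Two-layer bottleneck MLP: C -> C // r -> C, ReLU in between.
        return w2 @ np.maximum(w1 @ v, 0.0)

    avg = feat.mean(axis=(1, 2))  # (C,) average-pooled descriptor
    mx = feat.max(axis=(1, 2))  # (C,) max-pooled descriptor
    return sigmoid(shared_mlp(avg) + shared_mlp(mx))


def spatial_weights(feat: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Return one weight in (0, 1) per pixel of a (C, H, W) feature map.

    `kernel` has shape (2, k, k) with odd k; it is slid over the stacked
    channel-mean and channel-max maps with zero padding, keeping (H, W).
    """
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(pooled, ((0, 0), (p, p), (p, p)))
    h, w = pooled.shape[1:]
    logits = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            logits[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(logits)


def enhance_depth_features(feat, w1, w2, kernel):
    """Reweight channels first, then spatial positions (CBAM ordering)."""
    feat = feat * channel_weights(feat, w1, w2)[:, None, None]
    return feat * spatial_weights(feat, kernel)[None, :, :]


# Usage with random (untrained) weights; shapes are illustrative only.
rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 10, 4
depth_feat = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
out = enhance_depth_features(depth_feat, w1, w2, kernel)
print(out.shape)  # (16, 8, 10)
```

Because every weight is a sigmoid output, this form of reweighting can only attenuate features; training would teach it which depth channels and image regions to keep.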

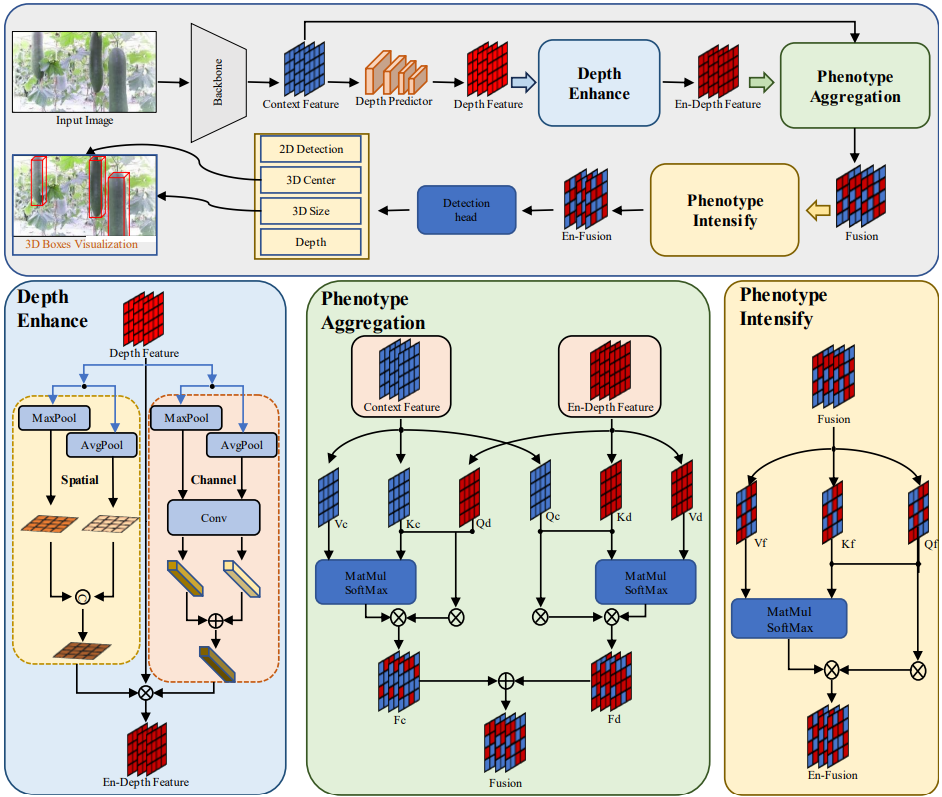

The overall architecture of TPDNet
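
The abstract does not specify how the phenotype aggregation and phenotype intensify modules capture the correspondence between heterogeneous image and depth features. One widely used mechanism for this kind of cross-modal matching is cross-attention, where image locations act as queries over depth locations. The NumPy sketch below substitutes that mechanism as an assumption; it is not the TPDNet modules themselves, `cross_modal_fusion` and the projection matrices `wq`, `wk`, `wv` are hypothetical, and it assumes both feature maps already share the same shape (C, H, W).

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # shift for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_modal_fusion(img_feat, depth_feat, wq, wk, wv):
    """Fuse (C, H, W) image and depth features with single-head cross-attention.

    Image locations are queries; depth locations supply keys and values. Row i
    of `attn` is a distribution over depth locations for image location i,
    i.e. a soft image-to-depth correspondence. The attended depth context is
    added back to the image features as a residual.
    """
    c, h, w = img_feat.shape
    img_tokens = img_feat.reshape(c, h * w).T  # (HW, C)
    depth_tokens = depth_feat.reshape(c, h * w).T  # (HW, C)
    q = img_tokens @ wq  # (HW, d)
    k = depth_tokens @ wk  # (HW, d)
    v = depth_tokens @ wv  # (HW, C)
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)  # (HW, HW)
    fused = img_tokens + attn @ v  # residual keeps the image features
    return fused.T.reshape(c, h, w), attn


# Usage with random (untrained) projections; shapes are illustrative only.
rng = np.random.default_rng(1)
C, H, W, d = 16, 6, 8, 8
img = rng.standard_normal((C, H, W))
depth = rng.standard_normal((C, H, W))
wq = rng.standard_normal((C, d)) * 0.1
wk = rng.standard_normal((C, d)) * 0.1
wv = rng.standard_normal((C, C)) * 0.1
fused, attn = cross_modal_fusion(img, depth, wq, wk, wv)
print(fused.shape, attn.shape)  # (16, 6, 8) (48, 48)
```

The residual connection means that if the value projection contributes nothing, the output falls back to the unchanged image features, so depth is added as extra context rather than replacing appearance cues. Full attention costs O((HW)^2) memory, which is why practical designs often restrict it to downsampled feature maps.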